Abstract

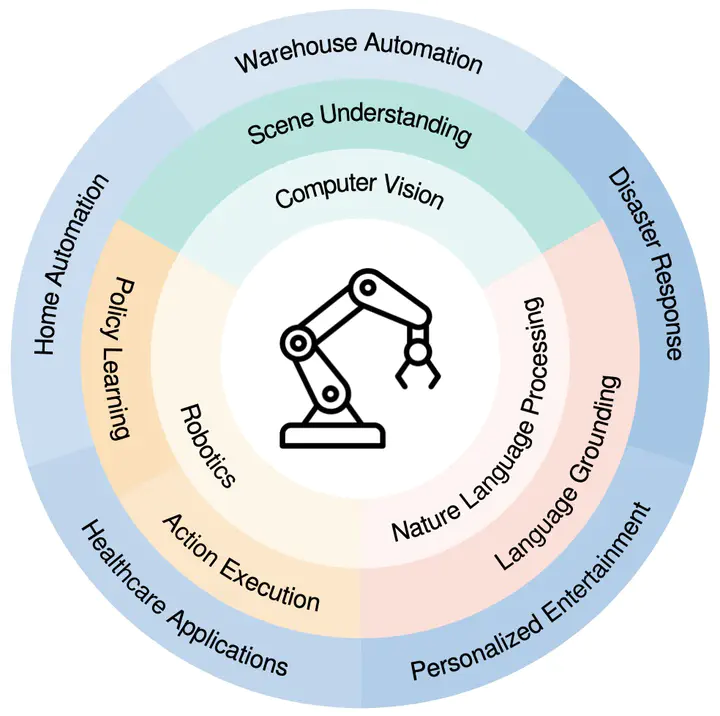

Language-conditioned robot manipulation is an emerging field aimed at enabling seamless communication and cooperation between humans and robotic agents by teaching robots to comprehend and execute instructions conveyed in natural language. This interdisciplinary area integrates scene understanding, language processing, and policy learning to bridge the gap between human instructions and robotic actions. In this comprehensive survey, we systematically review recent advances in language-conditioned robot manipulation. We categorize existing methods into language-conditioned reward shaping, language-conditioned policy learning, neuro-symbolic artificial intelligence, and the use of foundation models (FMs) such as large language models (LLMs) and vision-language models (VLMs). Specifically, we analyze state-of-the-art techniques with respect to semantic information extraction, environments and evaluation, auxiliary tasks, and task representation strategies. Through a comparative analysis, we highlight the strengths and limitations of current approaches in bridging language instructions with robot actions. Finally, we discuss open challenges and future research directions, focusing on improving generalization and addressing safety issues in language-conditioned robot manipulators.